Abstract

Siamese network-based object tracking has substantially advanced the autonomous capabilities of highly maneuverable unmanned aerial vehicles (UAVs). However, leading-edge tracking frameworks often depend on template matching, which causes them to struggle when the object appears from multiple views in consecutive frames. Moreover, general image-level pretrained backbones can overfit to holistic representations, leaving them misaligned with the object-level properties that UAV tracking requires. To tackle these issues, this work presents TRTrack, a comprehensive framework that fully exploits stereoscopic representation for UAV tracking. Specifically, a novel pretraining paradigm is proposed. Through trajectory-aware reconstruction training, the backbone's ability to extract stereoscopic structure features is strengthened without adding any parameters. Accordingly, an innovative hierarchical self-attention Transformer is proposed to capture local detail information and global structure knowledge. To optimize the correlation map, we propose a novel spatial correlation refinement (SCR) module, which improves the modeling of long-range spatial dependencies. Comprehensive experiments on three challenging UAV benchmarks demonstrate that the proposed TRTrack achieves superior UAV tracking performance in both precision and efficiency. Quantitative tests in real-world settings further confirm the effectiveness of our work. The code and demo videos are available at https://github.com/vision4robotics/TRTrack.

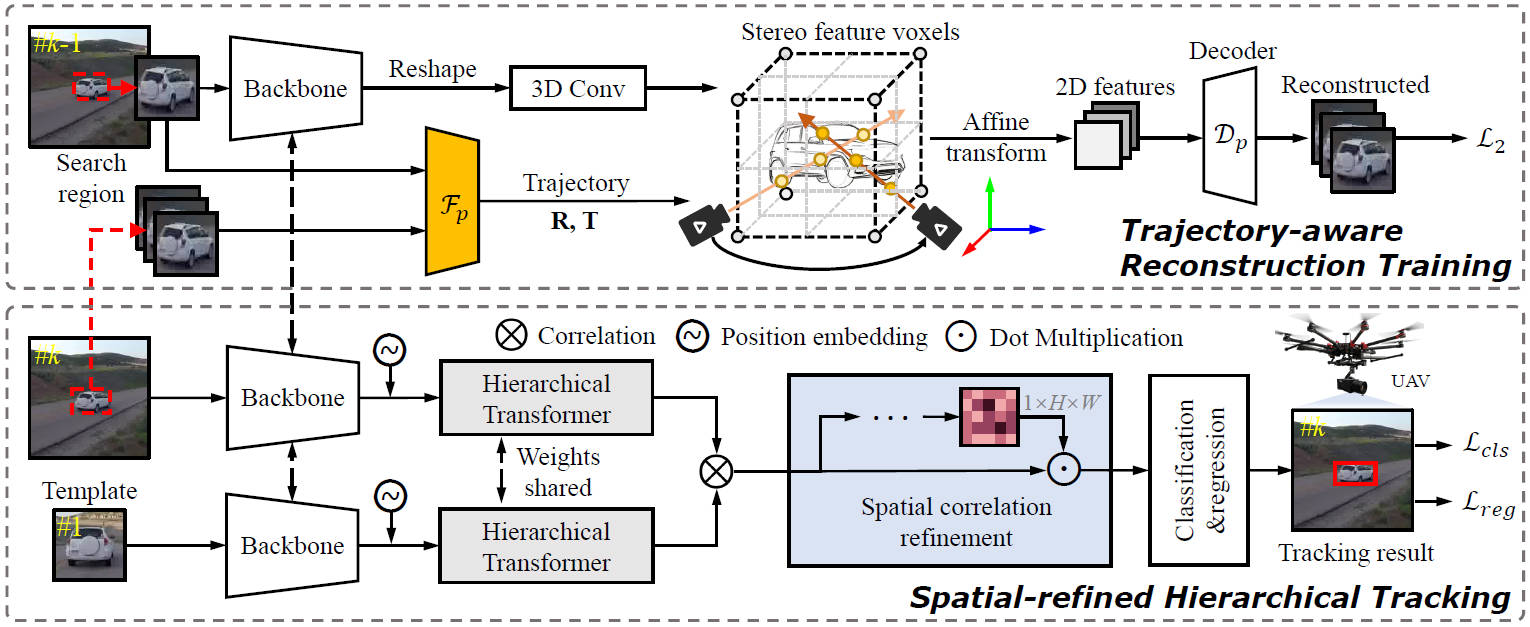

Overview of our TRTrack.
