AutoTrack: Towards High-Performance Visual Tracking for UAV with Automatic Spatio-Temporal Regularization

Abstract

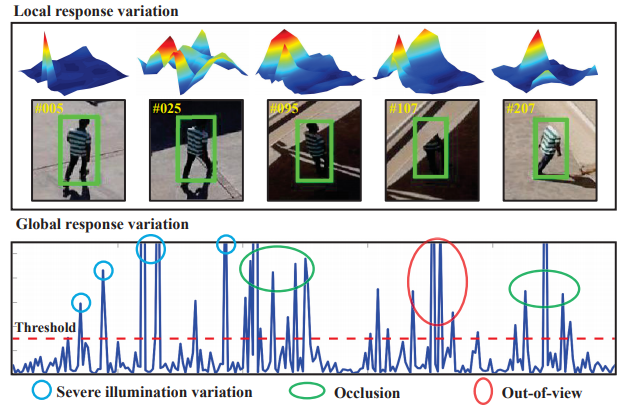

Most existing trackers based on discriminative correlation filters (DCF) introduce a predefined regularization term to improve the learning of the target object, e.g., by suppressing background learning or by restricting the change rate of the correlation filters. However, predefined parameters require considerable tuning effort, and they still fail to adapt to new situations that the designer did not anticipate. In this work, we propose a novel approach that learns the spatio-temporal regularization term online, automatically and adaptively. The local variation of the response map is introduced as spatial regularization so that the DCF focuses on learning the trustworthy parts of the object, while the global variation of the response map determines the updating rate of the filter. Extensive experiments on four UAV benchmarks demonstrate the superiority of our method over state-of-the-art CPU- and GPU-based trackers, at a speed of ∼60 frames per second on a single CPU.

We additionally propose applying our tracker to UAV localization. Extensive tests in practical indoor scenarios demonstrate the effectiveness and versatility of our localization method. The code is available at https://github.com/vision4robotics/AutoTrack.
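To make the idea in the abstract concrete, the following is a minimal sketch of how response map variation could drive both regularization terms. It is an illustration under stated assumptions, not the released implementation: the function names, the peak-alignment step, the logarithmic mapping, and all constants (`delta`, `zeta`, `nu`, `phi`, `eps`) are hypothetical choices made for this example.

```python
import numpy as np


def response_variation(curr, prev, eps=1e-6):
    """Per-pixel relative change between two response maps.

    The current map is circularly shifted so that its peak coincides with
    the peak of the previous map (an assumed alignment step).
    """
    curr_peak = np.unravel_index(np.argmax(curr), curr.shape)
    prev_peak = np.unravel_index(np.argmax(prev), prev.shape)
    shift = (prev_peak[0] - curr_peak[0], prev_peak[1] - curr_peak[1])
    aligned = np.roll(curr, shift, axis=(0, 1))
    return np.abs(aligned - prev) / (np.abs(prev) + eps)


def auto_regularization(curr, prev, base_weight,
                        delta=0.2, zeta=13.0, nu=2e-5, phi=3000.0):
    """Derive a spatial weight map and a temporal reference value.

    All constants are hypothetical placeholders for illustration.
    """
    local_var = response_variation(curr, prev)

    # Spatial part: pixels whose response changed a lot are penalized more,
    # so the filter concentrates on the more trustworthy regions.
    spatial_weight = base_weight + delta * np.log1p(local_var)

    # Temporal part: the global variation is summarized by the L2 norm.
    global_var = float(np.linalg.norm(local_var))
    if global_var > phi:
        # Variation too large: signal the caller to skip the filter update.
        temporal_ref = None
    else:
        # Larger variation gives a smaller reference value.
        temporal_ref = zeta / (1.0 + np.log1p(nu * global_var))
    return spatial_weight, temporal_ref
```

In this sketch, a caller would pass the response maps of two consecutive frames together with a fixed base spatial weight, and treat a `temporal_ref` of `None` as a signal not to update the filter for that frame.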

Central idea of AutoTrack.
