Abstract

Vision-based aerial tracking has recently shown enormous potential in the field of remote sensing. However, challenges such as occlusion, fast motion, and illumination variation remain critical issues for realistic aerial tracking applications. These challenges, which occur frequently from the aerial perspective, can easily cause object feature pollution. With contaminated object features, the credibility of trackers is prone to substantial degradation. To address this issue, this work proposes a novel end-to-end decontaminated network, i.e., DeconNet, to alleviate object feature pollution efficiently and effectively. DeconNet mainly consists of a downsampling phase and an upsampling phase. Specifically, the decontaminated downsampling network first reduces the polluted object information with two convolution branches, enhancing the object location information. Subsequently, the decontaminated upsampling network applies super-resolution technology to restore the object scale and shape information, with a low-to-high encoder for further decontamination. Additionally, a pooling distance loss function is carefully designed to improve the decontamination effect of the decontaminated downsampling network. Comprehensive evaluations on four well-known aerial tracking benchmarks validate the effectiveness of DeconNet. In particular, the proposed tracker achieves superior performance on sequences with feature pollution. Moreover, real-world tests on an aerial platform demonstrate the efficiency of DeconNet, which runs at 30.6 frames per second.

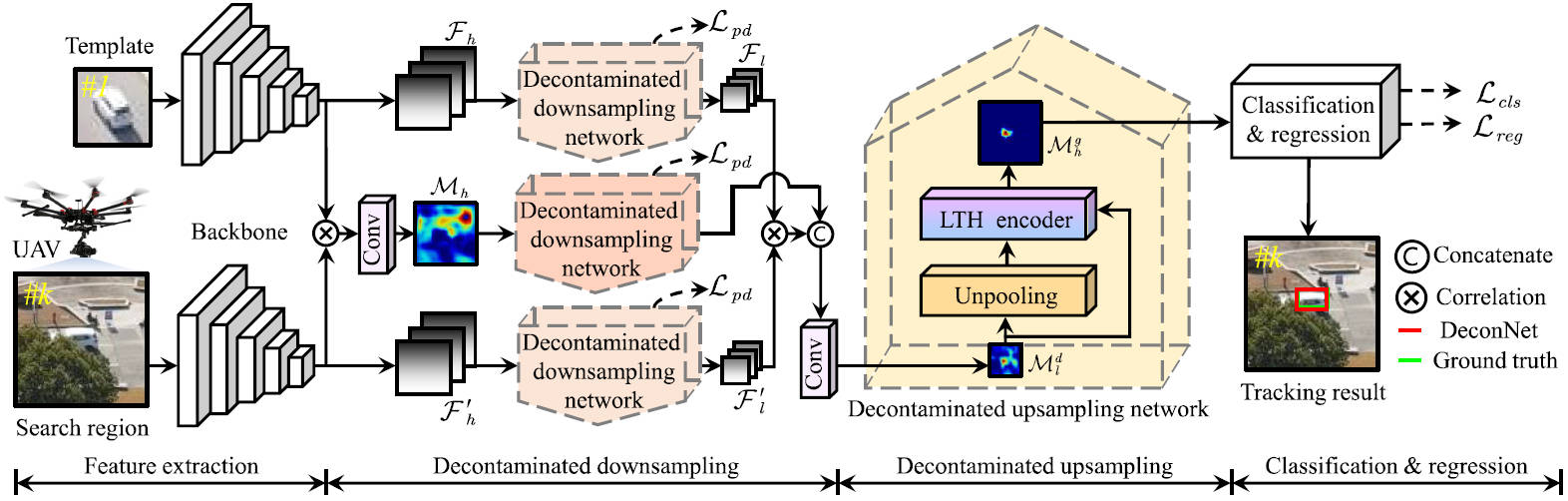

Overview of the proposed DeconNet.
