Robust Scalable Part-Based Visual Tracking for UAV with Background-Aware Correlation Filter

Abstract

Robust visual tracking is a challenging task in many civilian unmanned aerial vehicle (UAV) applications. Although the classical correlation filter (CF) has been widely applied to UAV object tracking, it does not learn the background surrounding the object. In addition, the classical CF cannot estimate changes in object scale, and it does not cope effectively with object occlusion. Part-based tracking approaches are often used to address occlusion; however, they cannot achieve real-time performance on a UAV because of the high computational cost of updating the object appearance model. In this paper, a novel robust visual tracker is presented for UAVs. The object is first divided into multiple parts, and a separate background-aware correlation filter is applied to each part. An efficient coarse-to-fine strategy, combining structure comparison with Bayesian inference, is proposed to locate the object and estimate its scale changes. In addition, an adaptive threshold is presented for updating each local appearance model with a Gaussian process regression method. Qualitative and quantitative tests show that the proposed tracker runs in real time (more than twenty frames per second) on an i7 processor at 640×360 image resolution, and performs favorably against the most popular state-of-the-art visual trackers in terms of robustness and accuracy. To the best of our knowledge, this is the first scalable part-based visual tracker to be presented and applied to UAV tracking.
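The abstract describes the method only at a high level. The following minimal sketch illustrates the part-based idea it builds on. It trains a *classical* single-channel correlation filter for each cell of a grid-divided object, using a MOSSE-style closed-form ridge regression in the Fourier domain. It then fuses the per-part displacement estimates with a median, so that a minority of occluded parts does not corrupt the result.

This is not the paper's background-aware CF, coarse-to-fine Bayesian localization, or Gaussian-process-based update. The function names (`train_filter`, `detect`, `split_parts`, `fuse_offsets`), the 2×2 grid, the regularization `lam`, the label width `sigma`, and the median fusion are all hypothetical choices for illustration. Feature extraction and cosine windowing are also omitted.

```python
import numpy as np

# Hypothetical helpers: a classical (NOT background-aware) CF applied per part.

def gaussian_label(h, w, sigma=2.0):
    """Desired correlation output: a 2-D Gaussian peaked at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2.0 * sigma ** 2))


def train_filter(patch, lam=1e-2, sigma=2.0):
    """Closed-form ridge-regression filter in the Fourier domain (MOSSE-style).

    Returns conj(H) = G * conj(F) / (F * conj(F) + lam), so that
    conj(H) * F is approximately G for the training patch.
    """
    F = np.fft.fft2(patch)
    G = np.fft.fft2(gaussian_label(*patch.shape, sigma=sigma))
    return G * np.conj(F) / (F * np.conj(F) + lam)


def detect(h_conj, patch):
    """Correlate a new patch with the filter and return the peak offset (dy, dx).

    Offsets are measured from the patch centre, so only shifts smaller than
    half the patch size can be recovered unambiguously.
    """
    response = np.real(np.fft.ifft2(h_conj * np.fft.fft2(patch)))
    py, px = np.unravel_index(np.argmax(response), response.shape)
    h, w = patch.shape
    return int(py - h // 2), int(px - w // 2)


def split_parts(patch, rows=2, cols=2):
    """Divide the object patch into a rows x cols grid of equally sized parts."""
    ph, pw = patch.shape[0] // rows, patch.shape[1] // cols
    return [patch[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
            for r in range(rows) for c in range(cols)]


def fuse_offsets(offsets):
    """Per-axis median of the part offsets: a simple stand-in for the paper's
    Bayesian inference, robust to a minority of occluded (outlier) parts."""
    med = np.median(np.asarray(offsets, dtype=float), axis=0)
    return float(med[0]), float(med[1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    parts = split_parts(rng.random((64, 64)))          # synthetic 64x64 "object"
    filters = [train_filter(p) for p in parts]
    moved = [np.roll(p, (3, -2), axis=(0, 1)) for p in parts]  # shift each part
    moved[1] = rng.random((32, 32))                    # simulate one occluded part
    print(fuse_offsets([detect(f, m) for f, m in zip(filters, moved)]))
```

In this synthetic example, each part is circularly shifted by (3, -2), and one part is replaced by noise to mimic occlusion. The three unoccluded parts each report (3, -2), and the median discards the occluded part's arbitrary estimate, so the fused offset is (3.0, -2.0). A real tracker would extract parts from a search window in the next frame rather than from circular shifts, and would also handle scale and model updating.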

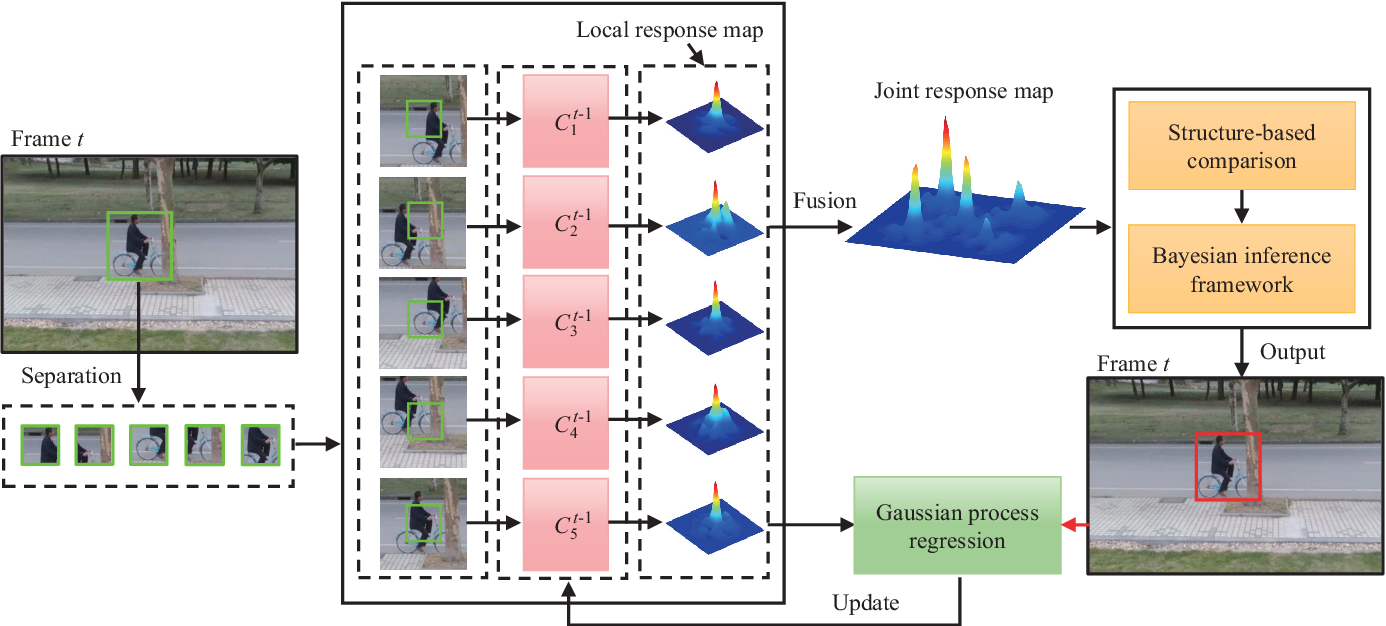

Fig. 1 Main structure of the proposed tracking approach.