Abstract

This paper presents a novel online learning-based tracker for unmanned aerial vehicles (UAVs) in different types of tracking applications, such as pedestrian following, automotive chasing, and building inspection. Instead of relying only on the histogram of oriented gradient (HOG) feature, the presented tracker additionally uses intensity, color names, and saliency to represent both the tracked object and its background information in a background-aware correlation filter (BACF) framework. In other words, four different voters, each combining one of these four features (HOG, intensity, color names, and saliency) with the BACF framework, locate the object independently. The response maps generated by these voters are then fused by a new strategy: the peak-to-sidelobe ratio (PSR), which measures the peak strength of a response, is used to weight each response map, thereby suppressing the noise in each response and improving the final fused map. Finally, the fused response map is used to locate the object accurately. Qualitative and quantitative experiments on 123 challenging UAV image sequences, i.e., UAV123, show that the proposed tracking approach, i.e., the OMFL tracker, performs favorably against 13 state-of-the-art trackers in terms of accuracy, robustness, and efficiency. In addition, the multi-feature learning approach improves object tracking performance compared with the single-feature learning methods used in the literature.
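
The fusion idea described above can be illustrated with a minimal sketch. It is not the paper's implementation: the function names `psr` and `fuse_responses`, the size of the window excluded around the peak, and the choice of a normalized weighted sum are all assumptions made here for illustration. The peak-to-sidelobe ratio is computed in its common form, (peak − sidelobe mean) / sidelobe standard deviation.

```python
import numpy as np


def psr(response, exclude=5):
    """Peak-to-sidelobe ratio of a 2-D response map.

    Assumption: the sidelobe is everything outside a
    (2*exclude+1) x (2*exclude+1) window centred on the peak,
    and the map is larger than that window.
    """
    r, c = np.unravel_index(np.argmax(response), response.shape)
    mask = np.ones(response.shape, dtype=bool)
    mask[max(r - exclude, 0):r + exclude + 1,
         max(c - exclude, 0):c + exclude + 1] = False
    sidelobe = response[mask]
    # Small constant avoids division by zero on a flat sidelobe.
    return (response[r, c] - sidelobe.mean()) / (sidelobe.std() + 1e-12)


def fuse_responses(responses):
    """Fuse per-feature response maps with PSR-derived weights.

    Hypothetical fusion rule: a weighted sum with weights
    proportional to each map's (non-negative) PSR.
    """
    weights = np.array([max(psr(r), 0.0) for r in responses])
    total = weights.sum()
    if total == 0:
        # Fall back to uniform weights if no map has a usable peak.
        weights = np.full(len(responses), 1.0 / len(responses))
    else:
        weights = weights / total
    fused = sum(w * r for w, r in zip(weights, responses))
    peak = tuple(int(i) for i in np.unravel_index(np.argmax(fused), fused.shape))
    return fused, weights, peak
```

In this sketch, a voter whose response map has a sharp, isolated peak receives a large weight, while a voter with a noisy, flat response contributes little to the fused map from which the object location is read.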

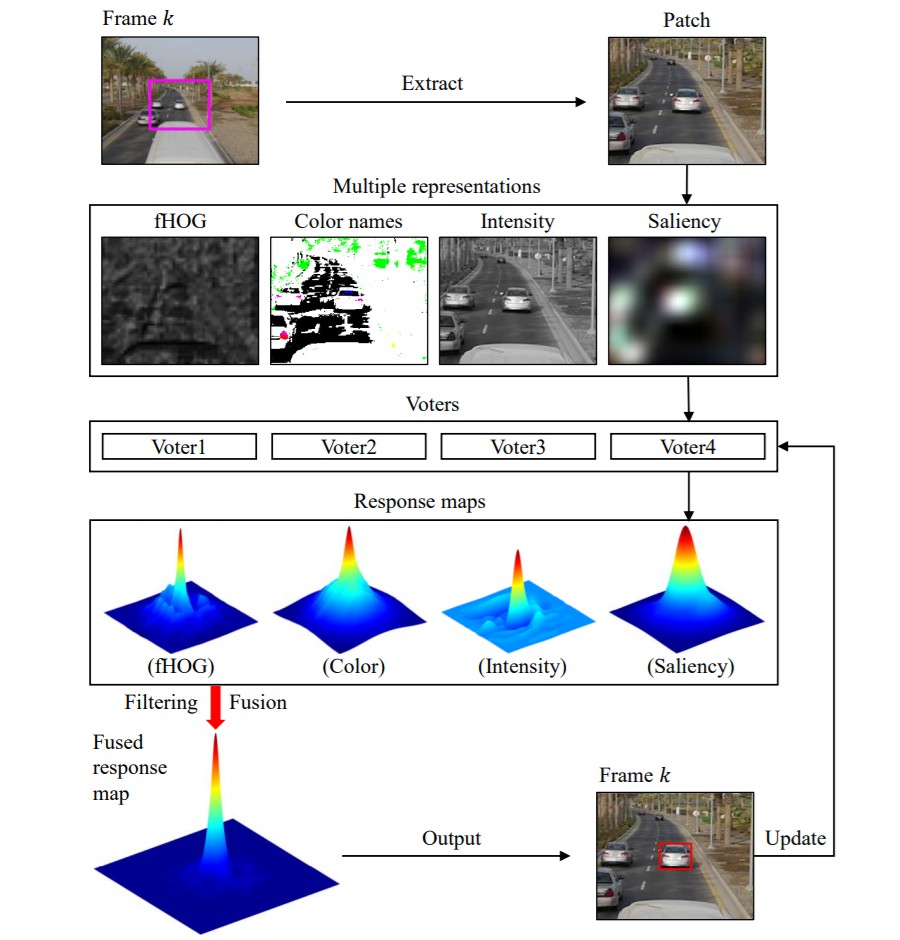

Fig. 1 Main structure of the proposed tracking approach.
