Abstract

Siamese object tracking has facilitated diverse applications for autonomous unmanned aerial vehicles (UAVs). However, Siamese trackers are typically trained on general images containing relatively large objects rather than the small objects observed from UAVs. This gap in object scale between the training and inference phases often leads to suboptimal tracking performance or even tracking failure. To close this gap and tailor general Siamese trackers to UAV tracking, this work proposes a novel scale-aware domain adaptation framework, i.e., ScaleAwareDA. Specifically, a contrastive learning-inspired network is proposed to guide the training of Transformer-based feature alignment for small objects. In this network, a feature projection module is designed to avoid information loss for small objects, and a feature prediction module is developed to drive this training in a self-supervised way. In addition, to construct the target domain, training datasets with UAV-specific attributes are obtained by downsampling general training datasets. Consequently, this training approach helps a tracker represent objects in UAV scenarios more powerfully and thus maintain its robustness. Extensive experiments on three authoritative and challenging UAV tracking benchmarks demonstrate the superior tracking performance of ScaleAwareDA. In addition, quantitative real-world tests further attest to its practicality.
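As a rough illustration of the target-domain construction mentioned above, the following sketch downsamples one annotated training frame so that the object appears at a smaller scale. It is a minimal example under stated assumptions, not the ScaleAwareDA implementation: the function name `downsample_sample`, the integer `factor`, the average-pooling scheme, and the `(x, y, w, h)` box format are all hypothetical choices made for illustration.

```python
import numpy as np


def downsample_sample(image, box, factor):
    """Average-pool an H x W x C image by an integer factor and rescale its box.

    Assumptions (not taken from the paper): `box` is (x, y, w, h) in pixels
    and `factor` is a positive integer scale ratio.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    h, w, c = image.shape
    # Crop so both spatial dimensions are divisible by the factor.
    h_crop, w_crop = h - h % factor, w - w % factor
    cropped = image[:h_crop, :w_crop].astype(np.float32)
    # Group pixels into factor x factor blocks and average each block.
    blocks = cropped.reshape(h_crop // factor, factor, w_crop // factor, factor, c)
    small = blocks.mean(axis=(1, 3))
    # The annotation shrinks by the same ratio as the image.
    small_box = tuple(v / factor for v in box)
    return small, small_box


# Synthetic stand-in for a frame from a general tracking dataset.
rng = np.random.default_rng(0)
source_image = rng.integers(0, 256, size=(240, 320, 3)).astype(np.uint8)
source_box = (100.0, 60.0, 80.0, 120.0)

target_image, target_box = downsample_sample(source_image, source_box, factor=4)
print(target_image.shape, target_box)  # (60, 80, 3) (25.0, 15.0, 20.0, 30.0)
```

With a factor of 4, an 80 x 120 pixel object shrinks to 20 x 30 pixels, which mimics the scale shift between general training images and UAV footage that the framework is designed to address.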

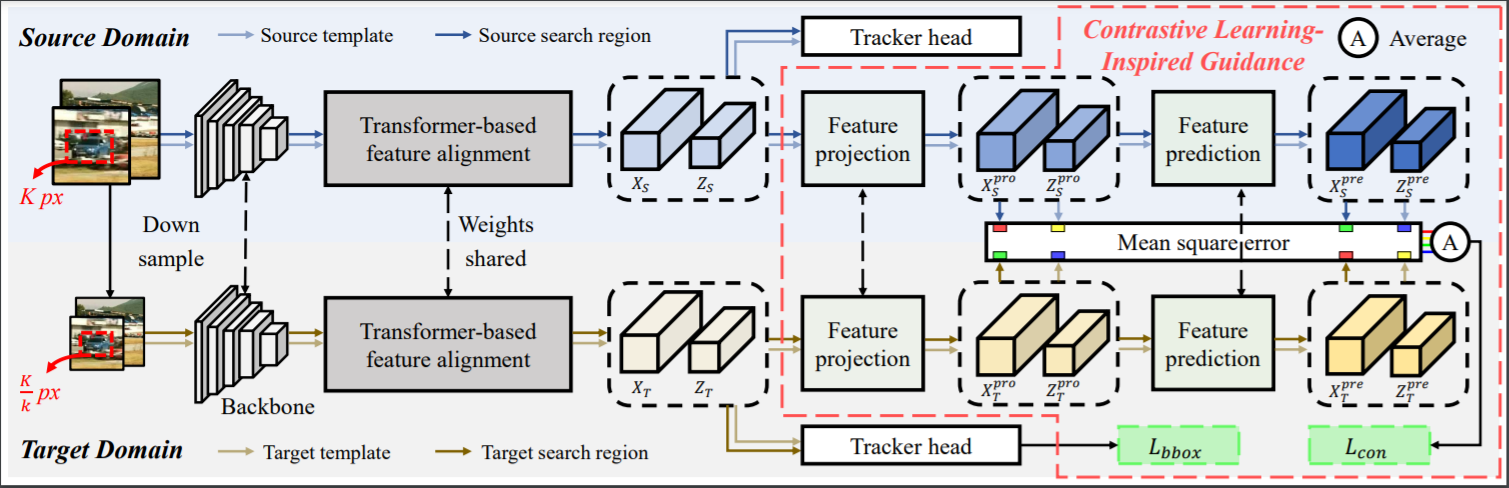

Overview of our ScaleAwareDA.
